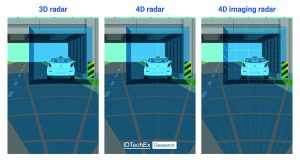

Automotive radar has been described as one of the most significant additions to vehicles in the past two decades. In its 3D form, measuring azimuth (horizontal angle), distance and velocity, radar is used for cruise control and automatic emergency braking in advanced driver assistance systems (ADAS). As SAE Level 3 vehicles enter the market, radar has progressed to 4D, adding elevation measurement to detect how high an object is from the ground and so determine whether it is a kerbstone or a pedestrian.

“Imaging radar should have sufficient resolution to distinguish small obstacles at long distances, for instance a person on the road at 100m,” says Dr James Jeffs, senior technology analyst at IDTechEx. “Assuming that the person is 5-6ft tall, a resolution of around 1° would be needed to separate the person from the road. In this scenario, the system would have enough time to activate the brakes and bring the vehicle to a stop, avoiding a collision, even at highway speeds,” he says.
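The figures in the quote can be checked with a little trigonometry. The sketch below is illustrative only: it computes the angle a 5-6ft person subtends at 100m, then estimates a stopping distance using an assumed deceleration of 7m/s² and an assumed system latency of 0.5s. Neither of those two values comes from IDTechEx, and the function names are hypothetical.

```python
import math

FT_TO_M = 0.3048  # exact conversion factor, feet to metres


def angular_size_deg(height_m, range_m):
    """Angle (degrees) subtended by an upright object of given height at a given range."""
    return math.degrees(math.atan2(height_m, range_m))


def stopping_distance_m(speed_kmh, decel_ms2=7.0, latency_s=0.5):
    """Distance travelled during system latency plus constant-deceleration braking.

    decel_ms2 and latency_s are illustrative assumptions, not sourced values.
    """
    v = speed_kmh / 3.6  # km/h to m/s
    return v * latency_s + v * v / (2.0 * decel_ms2)


for feet in (5, 6):
    angle = angular_size_deg(feet * FT_TO_M, 100.0)
    print(f"{feet}ft person at 100m subtends {angle:.2f} degrees")

print(f"Stopping distance from 120km/h: {stopping_distance_m(120):.0f}m")
```

Under these assumptions the person subtends roughly 0.9° to 1.0°, in line with the quoted resolution of around 1°, and a vehicle travelling at 120km/h stops in a little under 100m. A lower deceleration or a higher speed would push the stopping distance beyond that.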

NXP Semiconductors announced an extension to its 28nm RFCMOS radar one-chip SoC family at CES in Las Vegas. The SAF86xx supports a range of sensor outputs, including object-, point cloud- or range-FFT-level data, for smart sensors in today’s architectures and streaming sensors in future distributed architectures.

It targets software-defined vehicle architecture for ADAS rather than individual sensors and supports SAE Level 2 and Level 3 advanced comfort features such as hybrid pilot operation, automated parking and urban pilot operation.

NXP has collaborated with automotive radar software startup Zendar to develop high-resolution radar systems for automotive applications based on Zendar’s Distributed Aperture Radar (DAR) technology. DAR enhances the resolution of radar systems and eliminates the need for thousands of antenna channels by fusing information from a vehicle’s multiple radar sensors to create a single, larger antenna. The result is an angular resolution below 0.5°, giving lidar-like performance for mapping an area. Conventional radar sensors have an angular resolution of between 2° and 4°.
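Why a larger antenna helps can be seen from the common rule of thumb that angular resolution is roughly the wavelength divided by the aperture width. The sketch below applies that approximation at an assumed carrier frequency of 77GHz, which lies in the automotive radar band but is not stated in the article. It is not a description of how Zendar or NXP compute resolution, and real figures depend on array design and signal processing.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def aperture_for_resolution_m(resolution_deg, freq_hz=77e9):
    """Approximate aperture width needed for a given angular resolution.

    Uses the rule of thumb theta ~ wavelength / aperture (theta in radians).
    The 77GHz default is an assumption made for illustration.
    """
    wavelength_m = SPEED_OF_LIGHT_M_S / freq_hz
    return wavelength_m / math.radians(resolution_deg)


for res_deg in (4.0, 2.0, 0.5):
    width_cm = aperture_for_resolution_m(res_deg) * 100.0
    print(f"{res_deg} degrees needs an aperture of about {width_cm:.1f}cm")
```

On this approximation, 2° to 4° corresponds to an aperture of roughly 6cm to 11cm, which fits in a single sensor, while 0.5° needs around 45cm. That is wider than a typical sensor module but well within the spacing between sensors mounted across a vehicle.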

DAR solutions will be based on NXP’s S32R radar processor platform and RFCMOS SAF8x SoCs. In addition to using simplified, standard radar sensors with reduced thermal complexity, DAR has a smaller footprint than conventional radar.

Radar target simulator

To verify the SAF86xx, NXP collaborated with Rohde & Schwarz using its radar target simulator.

The two companies conducted tests to verify the reference design using the R&S AREG800 automotive radar echo generator with the R&S QAT100 antenna mmW front end for short distance object simulation, RF performance and signal processing.

The radar sensor reference design can be used for short-, medium- and long-range radar applications for New Car Assessment Program safety requirements as well as L2 and L3 comfort functions.

The test system characterises radar sensors and radar echo generation with object distances down to the airgap value of the radar under test. It is suitable for the whole automotive radar lifecycle, including development lab, hardware-in-the-loop, vehicle-in-the-loop, validation and production application requirements. It is scalable and can emulate the most complex traffic scenarios for ADAS, says Rohde & Schwarz.

Sensing systems

More mmWave radar sensor technology was demonstrated by TI as it introduced the AWR2544 mmWave radar sensor chip, claiming it as a first for satellite radar architectures. MulticoreWare and Imagination also demonstrated GPU compute on TI’s TDA4VM processor, adding around 50 GFLOPS of extra compute and demonstrating improvement in the performance of common workloads used for ADAS.

Another collaboration was between Eyeris, Omnivision and Leopard Imaging. The trio has developed a production reference design for in-cabin sensing. Eyeris’ monocular 3D sensing AI software algorithm is integrated into Leopard Imaging’s 5MP backside-illuminated global shutter camera module, which uses Omnivision’s OX05B sensor and OAX4600 image signal processor.

Eyeris’ monocular 3D sensing AI enables any 2D image sensor, including RGB-IR sensors, to provide depth-aware whole-cabin sensing including driver monitoring system and occupant monitoring system data. Omnivision’s OX05B 5MP RGB-IR image sensor and OAX4600 ISP process the monocular 3D sensing AI data.

AI engines

One direction for the automotive industry is the integration of AI to deliver the safety and security features of autonomous models. Manufacturers will integrate autonomous vehicle applications to differentiate vehicles in a competitive marketplace. These applications will rely heavily on AI, advises James Hodgson, research director at ABI Research, requiring compute platforms that deliver powerful and efficient AI compute.

“The number of highly automated vehicles shipping each year is set to grow at a CAGR of 41% between 2024 and 2030, signalling a healthy growth opportunity for suppliers of heterogeneous SoCs with powerful and efficient AI compute,” he says.
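To put the growth rate in perspective, a compound annual growth rate can be converted into an overall multiple. The short sketch below assumes six annual compounding periods between 2024 and 2030; ABI Research’s baseline shipment figures are not given here, so only the multiple is shown.

```python
def growth_multiple(cagr, years):
    """Overall growth factor after compounding at `cagr` for `years` periods."""
    return (1.0 + cagr) ** years


# Assumption: 2024 to 2030 is treated as six annual compounding periods.
multiple = growth_multiple(0.41, 2030 - 2024)
print(f"41% CAGR over six years is about {multiple:.1f}x annual shipments")
```

On that reading, annual shipments in 2030 would be nearly eight times the 2024 level.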

AMD launched the Versal AI Edge XA adaptive SoC, the company’s first 7nm device to be automotive-qualified. It is designed for use as an AI engine in forward cameras, in-cabin monitoring, lidar, 4D radar, surround view, automated parking and autonomous driving systems. The SoC includes AI engines for inference on data in edge sensors such as lidar, radar and cameras as well as in a centralised domain controller. The AI engines are capable of classification and feature tracking. The series ranges from 20k to 521k LUTs and from 5TOPS to 171TOPS.

The scalable SoCs can be ported using the same tools as earlier Versal adaptive SoCs. The initial releases are expected early this year, with more to be released later in 2024.

AMD also introduced the Ryzen Embedded V2000A series processor for use in a digital cockpit, from the infotainment console to the digital cluster and passenger displays. The x86 auto-qualified processor family is the company’s response to consumer expectations for in-vehicle experiences for connectivity, entertainment and workplace use. It says the processor brings a PC-like experience to in-vehicle entertainment.

This latest Ryzen Embedded processor is built on 7nm process technology and uses Zen 2 cores and Radeon Vega 7 graphics. In addition to HD graphics for digital cockpit representations or passenger screens, it provides security features and enables automotive software through hypervisors. It supports Automotive Grade Linux and Android Automotive.

Electronics Weekly Electronics Design & Components Tech News