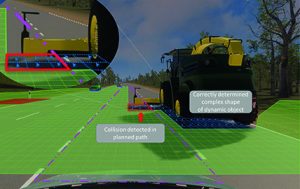

Figure 1: Typical classification-based perception uses simple object models (green bounding box). Unknown object extensions, such as the harvester tool, might be ignored and could lead to collisions along the planned path (magenta).

The safe automation of commercial vehicles is in tremendous demand from the market. Business cases appear attractive and the technical issues seem solvable, yet ensuring the safety of such highly automated vehicles remains challenging.

Designers have to consider the safety aspects of typical perception and planning stacks for autonomous trucks operating in logistics hubs. One proposal is a complementary safety channel that addresses the weaknesses of the algorithms currently in use. This safety channel explicitly handles unknown and hazardous objects and ensures that the vehicle never collides with any of them.

Reducing complexity

When asked about technical feasibility, companies automating intra-hub operations would probably say that autonomous operation is achievable because there is a high degree of control over the operational design domain. This allows operators to limit the supported scenarios drastically compared with vehicles driving on public roads.

On an abstract level, it seems plausible that reducing the number of supported scenarios helps reduce the system's complexity. But what drives this complexity in the first place?

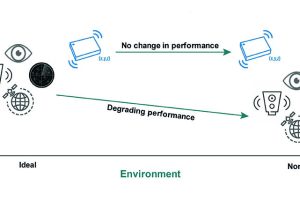

Among the many technical challenges, it is perception that still gives developers and managers sleepless nights. On public roads, false alarm rates close to zero are mandatory for both safety and passenger acceptance. With a given sensor set, however, reducing the false alarm rate also reduces the detection rate, and vice versa. For ADAS (advanced driver assistance system) applications, OEMs prefer to accept lower detection rates in favour of low false alarm rates, which also keeps costs down. Following the definition of supervised automation, or L2, the driver compensates for the reduced detection rate because they remain responsible for the car at all times. This reduction in detection rate makes ADAS applications feasible from both a technical and a cost perspective.
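The coupling between detection rate and false alarm rate can be illustrated with a minimal sketch, assuming a detector that reports an object whenever its confidence score exceeds a single threshold. The scores below are invented for illustration and do not come from any real sensor; the function name `rates` is hypothetical.

```python
"""Illustrative sketch: one detection threshold trades detection rate
against false alarm rate. All scores are made-up example values."""


def rates(true_scores, clutter_scores, threshold):
    """Return (detection_rate, false_alarm_rate) for a score threshold.

    true_scores: detector confidence for real objects
    clutter_scores: detector confidence for non-objects (clutter, noise)
    A detection is reported whenever score >= threshold.
    """
    detected = sum(s >= threshold for s in true_scores)
    false_alarms = sum(s >= threshold for s in clutter_scores)
    return detected / len(true_scores), false_alarms / len(clutter_scores)


# Hypothetical confidence scores, assumed for illustration only.
objects = [0.95, 0.9, 0.8, 0.7, 0.6, 0.45, 0.3]
clutter = [0.65, 0.5, 0.4, 0.35, 0.2, 0.1, 0.05]

for t in (0.25, 0.5, 0.75):
    det, fa = rates(objects, clutter, t)
    print(f"threshold={t:.2f}  detection rate={det:.2f}  false alarm rate={fa:.2f}")
```

Raising the threshold removes false alarms but also drops real objects; an ADAS stack can choose a high threshold because the driver catches the misses, which an unsupervised system cannot rely on.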

For unsupervised autonomous driving (≥L3), reducing the detection rate is not an option because the driver is no longer responsible for ensuring safe driving. Instead, the system has to detect all objects. At the same time, it must not trigger false alarms, as these can pose an additional safety risk. This combination of the highest detection rates and the lowest false alarm rates is what makes unsupervised automation for vehicles on public roads so hard to develop.

Fenced areas, such as logistics hubs, offer new automation options because some perception requirements are lower than for autonomous driving on public roads. In particular, a moderate increase in false alarms still allows for a viable business case: if an automated vehicle mistakenly detects a non-existent object, it can safely stop and let a human operator clear the situation, for example via remote operation. Detection rate requirements might also be lowered thanks to the strict control over the operational design domain; for example, human access to the automated area might be restricted.

Classification-based perception

Currently, most ADAS applications rely on perception stacks that classify objects. If the camera knows what type, or class, of object a region in an image represents, it can infer the object's distance, size and speed from that region or bounding box. If the object type is unknown to the camera's AI, there is no guarantee that the object will be detected. In other words, if the object type was not present in the training data, the system may 'miss' the object. Although training datasets are continuously extended, even the most extensive datasets cannot be guaranteed to contain every object type that could appear on the road.
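Why classification matters for range estimation can be sketched with a simple pinhole camera model: for a known class, an assumed real-world height H and the box height h in pixels give a distance of roughly f·H/h. This is a minimal illustration, not how any particular product works; the class heights, the focal length and the function name `distance_from_box` are all hypothetical.

```python
"""Sketch: inferring distance from a bounding box via a class-specific
size prior and a pinhole camera model. All constants are assumed values."""

from typing import Optional

# Assumed typical real-world heights per known class, in metres (hypothetical).
CLASS_HEIGHT_M = {
    "pedestrian": 1.75,
    "car": 1.5,
    "truck": 3.8,
}

FOCAL_LENGTH_PX = 1200.0  # assumed camera focal length in pixels


def distance_from_box(label: str, box_height_px: float) -> Optional[float]:
    """Estimate range in metres as f * H / h for a known class.

    Returns None for an unknown class: without a size prior the box alone
    does not give a distance. And if the classifier never outputs a box
    for the object at all, the object is simply missed.
    """
    height_m = CLASS_HEIGHT_M.get(label)
    if height_m is None or box_height_px <= 0:
        return None
    return FOCAL_LENGTH_PX * height_m / box_height_px


print(distance_from_box("pedestrian", 105.0))  # 1200 * 1.75 / 105
print(distance_from_box("harvester_tool", 80.0))  # unknown class
```

The size prior is exactly what ties the geometry to the class label: remove the label and the estimate disappears with it.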

Typical perception systems use specific representations or models, such as bounding boxes, for known object types. These representations are efficient because they require little bandwidth and are well suited to function development. If an object does not fully match the designed representation, for instance because it has an unexpected extension, the perception may still try to force it into that representation (Figure 1).
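The Figure 1 situation can be mimicked with a minimal sketch, assuming the perception fits a fixed-size class template box centred on the points it attributes to the vehicle body, while the tool points are not recognised as part of the object. The template size, the coordinates and the `Box` type are hypothetical.

```python
"""Sketch: a fixed-size class model (bounding box) fitted to an object
with an unexpected extension. All dimensions and points are hypothetical."""

from dataclasses import dataclass


@dataclass
class Box:
    cx: float      # centre x (m)
    cy: float      # centre y (m)
    length: float  # extent along x (m)
    width: float   # extent along y (m)

    def contains(self, x: float, y: float) -> bool:
        return (abs(x - self.cx) <= self.length / 2
                and abs(y - self.cy) <= self.width / 2)


# Assumed class template for "tractor": 5 m long, 2.5 m wide.
TEMPLATE = (5.0, 2.5)

# Hypothetical measured points: tractor body plus a tool sticking out sideways.
body = [(10.0, 0.0), (12.0, 1.0), (14.0, -1.0), (12.0, 0.0)]
tool = [(12.0, 3.0), (12.0, 4.0)]

# Centre the class template on the body centroid; the tool is not recognised.
cx = sum(p[0] for p in body) / len(body)
cy = sum(p[1] for p in body) / len(body)
box = Box(cx, cy, *TEMPLATE)

outside = [p for p in body + tool if not box.contains(*p)]
print(box)
print("points outside the object model:", outside)
```

Everything outside the box, here the tool, is invisible to a planner that only consumes the object list, even though the sensor measured it.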

For supervised automation or ADAS applications, it is often sufficient to derive free space implicitly from a camera: if there is an object, the space between the sensor and this object is assumed to be free.
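Implicit free space can be reduced to a one-line rule, shown here as a minimal sketch along a single driving corridor: everything closer than the nearest detected object is treated as free. The scenario (an unclassified object at 12 m, a detected truck at 30 m) and the function name `implicit_free_distance` are hypothetical.

```python
"""Sketch: implicit free space derived from object detections along the
ego corridor. Scenario values are hypothetical."""

from typing import List


def implicit_free_distance(detected_ranges_m: List[float], max_range_m: float) -> float:
    """Free space extends up to the nearest *detected* object (or max range)."""
    return min(detected_ranges_m, default=max_range_m)


# Ground truth (hypothetical): an unknown object at 12 m, a known truck at 30 m.
# The classifier only reports the truck, so the unknown object is missing.
detections = [30.0]
free = implicit_free_distance(detections, max_range_m=80.0)
unknown_object_at = 12.0
print(f"assumed free up to {free} m; unknown object at {unknown_object_at} m")
print("unsafe free space:", free > unknown_object_at)
```

With a driver in the loop, the 18 m of wrongly assumed free space is tolerable; without one, it is a collision waiting to happen.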

For unsupervised automation, this approach risks missing relevant objects. Note that the sensor data, such as a camera image, does contain information about the (unknown) object; it is the AI-based perception that may struggle to extract that information and therefore provides unsafe free space. Sensors that provide direct range measurements, for example radar or lidar, allow explicit free space between the sensor and the detected objects to be derived without involving an AI. Path planning uses this free space to determine a safe trajectory. To obtain up-to-date and consistent free space, the velocities and driving directions of other traffic participants are required in addition to their positions. Classification-based perception systems derive velocity information by tracking objects over time. Again, this relies on the classification result, which is not a safe option for unsupervised automation.
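Explicit free space from a range sensor needs no classifier at all, as this minimal sketch of a single beam shows: cells the beam passes through are marked free, the cell containing the reflection is marked occupied, and cells behind it stay unknown. The grid layout, the 0.25 m cell size and the function name `cast_ray` are assumptions; the traversal simply samples twice per cell, which can skip corner cells on diagonal beams, whereas an exact grid traversal (for example Amanatides and Woo) would not.

```python
"""Sketch: explicit free space from one range measurement (lidar/radar).
Cell size, grid layout and traversal method are assumptions."""

import math

UNKNOWN, FREE, OCCUPIED = -1, 0, 1
CELL_M = 0.25  # assumed cell size in metres


def cast_ray(grid, origin, angle_rad, range_m):
    """Update grid cells along one range measurement.

    grid: list of rows (grid[row][col]); row = y index, col = x index.
    origin: sensor position (x, y) in metres in grid coordinates.
    Cells before the measured range become FREE, the cell containing the
    reflection becomes OCCUPIED, cells behind it are left untouched.
    """
    ox, oy = origin
    rows, cols = len(grid), len(grid[0])
    dx, dy = math.cos(angle_rad), math.sin(angle_rad)
    hit = (int((ox + range_m * dx) // CELL_M), int((oy + range_m * dy) // CELL_M))
    step = CELL_M / 2  # approximate traversal: sample twice per cell
    for i in range(int(range_m / step)):
        d = i * step
        cell = (int((ox + d * dx) // CELL_M), int((oy + d * dy) // CELL_M))
        if cell == hit:
            break
        cx, cy = cell
        if 0 <= cy < rows and 0 <= cx < cols:
            grid[cy][cx] = FREE
    hx, hy = hit
    if 0 <= hy < rows and 0 <= hx < cols:
        grid[hy][hx] = OCCUPIED
    return grid


# 4 x 20 grid (1 m x 5 m), sensor in cell (0, 0), one beam along +x hitting at 2 m.
grid = [[UNKNOWN] * 20 for _ in range(4)]
cast_ray(grid, origin=(0.125, 0.125), angle_rad=0.0, range_m=2.0)
symbols = {UNKNOWN: "?", FREE: ".", OCCUPIED: "#"}
print("".join(symbols[c] for c in grid[0]))
```

A real sensor model would also account for beam width and range noise; the point here is only that free and occupied cells follow directly from the measurement geometry.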

To summarise, safe path planning requires considering free space as well as known and unknown objects, whether static or dynamic, with arbitrary geometric shapes. Because of its detection limitations, the perception must not rely solely on object classification. Especially in fenced areas such as intra-hub logistics centres, the dependence on data during development should be small, because massive fleet data is not available there.

Occupancy grids

When checking whether a planned path is safe, the class of an object turns out to be irrelevant, at least at first. What matters most is whether a potentially harmful object occupies a particular region at a specific time. This concept of occupancy is the basis of well-established occupancy grid algorithms. In their classical variant, occupancy grids are well suited to static environments but, in dynamic environments, they produce outdated free space and inconsistencies between static and dynamic objects. Dynamic occupancy grid approaches resolve these issues by determining static and dynamic objects as well as safe free space in an integrated fashion (Figure 2). They can integrate data from radar, lidar and camera sensors and require only small amounts of data for parameterisation. One example is the Baselabs Dynamic Grid for typical automotive CPUs such as the Arm Cortex-A78AE, whose underlying white-box library can be configured for various sensor setups, including radar, lidar and camera. The system targets ASIL B and complements safety architectures for collision-free driving.
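The "outdated free space" problem of the classical variant can be seen in a minimal sketch of a single static occupancy grid cell, updated with the standard log-odds binary Bayes filter. This is the textbook static grid, not the Baselabs Dynamic Grid; the sensor-model probabilities and clamping limits are assumed values.

```python
"""Sketch of a *classical* (static) occupancy grid cell update using the
standard log-odds binary Bayes filter. Not the Baselabs Dynamic Grid;
sensor-model probabilities are assumed values."""

import math

P_HIT = 0.7    # assumed P(occupied | beam ends in cell)
P_MISS = 0.4   # assumed P(occupied | beam passes through cell)
L_HIT = math.log(P_HIT / (1 - P_HIT))
L_MISS = math.log(P_MISS / (1 - P_MISS))
L_MIN, L_MAX = -5.0, 5.0  # clamping keeps cells able to change state


def update(logodds: float, hit: bool) -> float:
    """Add the inverse-sensor-model log-odds (prior 0.5 -> log-odds 0)."""
    l = logodds + (L_HIT if hit else L_MISS)
    return max(L_MIN, min(L_MAX, l))


def probability(logodds: float) -> float:
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(logodds))


# A cell is hit by a passing vehicle for 5 scans, then the vehicle drives away.
l = 0.0
for _ in range(5):
    l = update(l, hit=True)
print(f"after 5 hits:   p_occ = {probability(l):.2f}")
for _ in range(2):
    l = update(l, hit=False)
print(f"after 2 misses: p_occ = {probability(l):.2f}  (free space lags behind)")
```

Because the static filter assumes nothing moves, the cell a vehicle has just left still reads as occupied, and the cells it is entering read as free until enough evidence accumulates. Dynamic grids address this by estimating a velocity per cell instead of treating every change as measurement noise.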

Figure 2: The safety channel, including the dynamic grid approach, determines a potential collision (red) with an unknown dynamic object in the planned path

Thanks to these properties of dynamic occupancy grid approaches, safety architectures can be built that include diverse redundancy to ensure collision-free automated driving. In such architectures, AI-based perception still extracts relevant objects based on classification, and path planning uses its results to determine a trajectory. This trajectory is treated as a proposal that the dynamic grid checks for potential collisions. Because the grid divides the world into cells, with cell sizes typically ranging from 50 mm to 250 mm, it can precisely check for collisions with objects of any type and arbitrary shape. With its integrated velocity inference, it consistently represents the dynamics of other traffic participants and allows objects and free space to be predicted.
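The proposal-and-check pattern can be sketched as follows, under loudly stated assumptions: each occupied cell is assumed to carry an occupancy probability and a velocity estimate, cells are predicted with constant velocity, and the truck footprint is approximated by a disc. The `Cell` type, `first_collision` and all constants are hypothetical and do not reflect the Baselabs API.

```python
"""Sketch: checking a proposal trajectory against a dynamic grid.
Hypothetical data model (not the Baselabs API): occupied cells carry an
occupancy probability and a velocity; constant-velocity prediction;
the ego footprint is approximated by a disc."""

from dataclasses import dataclass
from typing import List, Optional, Tuple

CELL_M = 0.1           # assumed cell size (article: typically 50 to 250 mm)
P_OCC_THRESHOLD = 0.5  # assumed: cells at or above this count as obstacles
EGO_RADIUS_M = 1.5     # assumed disc approximation of the truck footprint


@dataclass
class Cell:
    x: float      # cell centre (m)
    y: float
    p_occ: float  # occupancy probability
    vx: float     # estimated velocity (m/s); 0 for static cells
    vy: float


def first_collision(trajectory: List[Tuple[float, float, float]],
                    cells: List[Cell]) -> Optional[float]:
    """Return the first time t at which a predicted obstacle cell lies
    inside the ego disc, or None if the proposal is collision-free.

    trajectory: (t, x, y) samples of the planned ego path.
    """
    margin = EGO_RADIUS_M + CELL_M / 2  # inflate by half a cell
    for t, ex, ey in trajectory:
        for c in cells:
            if c.p_occ < P_OCC_THRESHOLD:
                continue
            # Constant-velocity prediction of the cell to time t.
            px, py = c.x + c.vx * t, c.y + c.vy * t
            if (px - ex) ** 2 + (py - ey) ** 2 <= margin ** 2:
                return t
    return None


traj = [(0.5 * k, 1.0 * k, 0.0) for k in range(11)]  # 2 m/s along +x for 5 s
crossing = [Cell(10.0, -4.0, 0.9, 0.0, 1.0), Cell(10.1, -4.0, 0.9, 0.0, 1.0)]
static = Cell(20.0, 5.0, 0.95, 0.0, 0.0)
ghost = Cell(4.0, 0.0, 0.2, 0.0, 0.0)  # low occupancy, ignored
print(first_collision(traj, crossing + [static, ghost]))
```

The check never asks what the crossing object is, only whether its predicted cells intersect the ego footprint. Two simplifications are worth noting: sampling at discrete times can miss collisions between samples (a real check would use a finer step or a swept footprint), and a production grid would index cells spatially rather than loop over all of them.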

Electronics Weekly Electronics Design & Components Tech News
