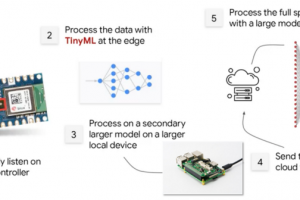

Back in May, Google initially released MediaPipe for Android, web, and Python (‘MediaPipe Solutions for On-Device Machine Learning’, to give it its full name). The tools aim to provide “no-code to low-code solutions to common on-device machine learning tasks”.

In addition to updating the Python SDK to support the Raspberry Pi, Google has now introduced an initial version of the iOS SDK.

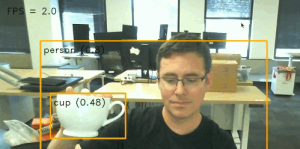

You can see example code and configurations for a Raspberry Pi project on the Google website. Specifically, the example covers object detection on a Raspberry Pi, using OpenCV (a real-time computer vision library) and NumPy (the Python array and matrix library). There is also a text classification example for iOS.
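To give a flavour of what the object detection task looks like in Python, here is a minimal sketch using the MediaPipe Tasks API. It is not Google's Raspberry Pi sample: the model filename `efficientdet_lite0.tflite`, the thresholds, and the helper names `box_corners`, `summarize` and `detect_objects` are assumptions chosen for illustration, and you would need to download a compatible model file yourself.

```python
def box_corners(bounding_box):
    """Convert a box given as origin_x, origin_y, width, height into two corner points."""
    top_left = (bounding_box.origin_x, bounding_box.origin_y)
    bottom_right = (
        bounding_box.origin_x + bounding_box.width,
        bounding_box.origin_y + bounding_box.height,
    )
    return top_left, bottom_right


def summarize(detections):
    """Return (label, score, top_left, bottom_right) for each detection."""
    rows = []
    for detection in detections:
        first = detection.categories[0]  # first listed category for this detection
        top_left, bottom_right = box_corners(detection.bounding_box)
        rows.append((first.category_name, round(first.score, 2), top_left, bottom_right))
    return rows


def detect_objects(image_path, model_path="efficientdet_lite0.tflite"):
    """Run detection on one image file (model_path is an assumed local file)."""
    # Imported lazily so the helpers above work without MediaPipe installed.
    import mediapipe as mp
    from mediapipe.tasks import python
    from mediapipe.tasks.python import vision

    options = vision.ObjectDetectorOptions(
        base_options=python.BaseOptions(model_asset_path=model_path),
        score_threshold=0.5,  # illustrative value
        max_results=5,        # illustrative value
    )
    with vision.ObjectDetector.create_from_options(options) as detector:
        image = mp.Image.create_from_file(image_path)
        return summarize(detector.detect(image).detections)
```

The corner points returned by `summarize` are in the form that OpenCV's `cv2.rectangle` expects, which is roughly how a camera-based project could draw boxes on each frame.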

You can read more on the Google website.

MediaPipe

Functionality includes Face Landmarker (detecting facial landmarks and blendshapes to determine human facial expressions, such as smiling), Image Segmenter (to divide images into regions based on predefined categories, to identify humans or multiple objects then apply visual effects such as background blurring) and Interactive Segmenter (taking the region of interest in an image, estimating the boundaries of an object at that location, and returning the segmentation for the object as image data).

The collection of tools, introduced at Google I/O 2023, consists of three elements: Studio, Tasks, and Model Maker.

See also: Flutter by the Holobooth for Web-based TensorFlow integration

Electronics Weekly Electronics Design & Components Tech News