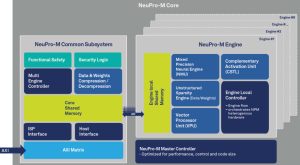

“NeuPro-M NPU architecture and tools have been redesigned to support transformer networks in addition to convolutional neural networks,” according to the company. “This enables applications leveraging the capabilities of generative and classic AI to be developed and run on the NeuPro-M NPU.”

The new IPs are NPM12, a 64Top/s dual-engine core, and NPM14, a 128Top/s quad-engine core.

They join the single-engine 32Top/s NPM11 and the octo-engine 256Top/s NPM18.
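Across the four cores, the quoted throughput scales linearly with engine count, at 32Top/s per engine. The short Python check below reproduces that arithmetic using only the figures quoted above. Dividing peak throughput evenly across engines is our simplifying assumption, not a Ceva specification, and the `per_engine_tops` helper is purely illustrative.

```python
# Peak throughput (Top/s) and engine count for each NeuPro-M core, as quoted above.
CORES = {
    "NPM11": (1, 32),
    "NPM12": (2, 64),
    "NPM14": (4, 128),
    "NPM18": (8, 256),
}


def per_engine_tops(engines: int, total_tops: int) -> float:
    """Hypothetical helper: split a core's quoted peak Top/s evenly across its engines."""
    return total_tops / engines


if __name__ == "__main__":
    for name, (engines, total) in CORES.items():
        print(f"{name}: {engines} engine(s), {total} Top/s, "
              f"{per_engine_tops(engines, total):.0f} Top/s per engine")
```

Each line prints 32 Top/s per engine, which is consistent with the family being built from a common engine replicated one, two, four or eight times.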

It is the transformer support that is aimed at generative AI algorithms.

“Transformer-based networks that drive generative AI require a massive increase in compute and memory resources, which calls for new approaches and optimized processing architectures,” said Ceva general manager Ran Snir.

On top of this, BF16 and FP8 data types have been added to reduce memory bandwidth, as has support for ‘true sparsity’ and parallel data and weight compression, said the company. The number of AI networks supported out of the box has also grown from 50 to 100.

Partly through architectural changes, and partly through a move from 7nm to 3nm process simulation, power efficiency has risen from 24Top/s/W, the January 2022 figure, to 350Top/s/W now. At that level, said Ceva, the NPU can process more than 1.5 million tokens/s/W for transformer-based LLM inference.

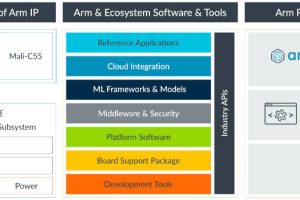

“New tools in the AI software stack, like the architecture planner tool, allow accurate neural network development and prototyping to ensure final product performance,” said Ceva. Its CDNN neural network compiler has a memory manager for memory bandwidth reduction and load balancing algorithms, and is compatible with open-source frameworks including TVM and ONNX.

Applications are foreseen in communication gateways, optically connected networks, cars, notebooks, tablets, AR and VR headsets, and smartphones.

The single-engine NPM11 is available now, while NPM12, NPM14 and NPM18 are “available for lead customers”, said Ceva. Further details are on Ceva’s NPM1x web page.

Electronics Weekly Electronics Design & Components Tech News