Varjo combines video-see-through (VST) and mixed reality to deliver sharp resolution without compromising processing performance

Of all the first-world problems, nausea while using a virtual reality (VR) headset ranks highly. It affects more women than men: a recent survey estimated that four times as many women as men feel queasy.

The reasons for this difference are unclear, but Finnish company Varjo has created a headset that combines VR with mixed reality (XR). The design can reduce the head movements that induce nausea, and it enhances augmented reality (AR) views by removing the transparency of objects superimposed on the real-world background.

Human frailty

A VR headset display offers far higher resolution than a standard display, but the human eye is the weak link in the design. The eye can only focus on a 2° field of view at the centre of an image, and that is the only area where it perceives colour and sharp detail. The brain ‘fills in’ the rest.

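Because the eye resolves fine detail in that central region, a display whose pixels are spread across the 100° field of view of VR looks pixelated there. The result is a ‘black grid’ effect (often called the screen-door effect) that detracts from the user’s view of the real world.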
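The pixelation follows from simple arithmetic: divide a panel’s horizontal pixel count by the field of view it covers to get pixels per degree (PPD), then compare the result with roughly 60 PPD, the figure commonly equated with 20/20 vision (1 arcminute per pixel). In the sketch below, the 1,920-pixel panel width is a hypothetical value chosen for illustration, not the specification of any Varjo display, and the foveal-area figure is a crude estimate that treats the field of view as a flat square.

```python
# Pixels per degree (PPD): how many display pixels cover one degree of view.
# Assumes pixels are spread evenly across the field of view (ignores lens distortion).

def pixels_per_degree(horizontal_pixels: int, fov_degrees: float) -> float:
    return horizontal_pixels / fov_degrees

# About 60 PPD (1 arcminute per pixel) is commonly equated with 20/20 vision.
EYE_LIMIT_PPD = 60.0
FOV_DEGREES = 100.0

# Hypothetical panel width of 1,920 pixels per eye, for illustration only.
ppd = pixels_per_degree(1920, FOV_DEGREES)
print(f"{ppd:.1f} PPD vs ~{EYE_LIMIT_PPD:.0f} PPD for 20/20 vision")  # 19.2 PPD vs ~60 PPD for 20/20 vision

# Pixels needed across the whole 100-degree view to reach ~60 PPD everywhere.
print(int(EYE_LIMIT_PPD * FOV_DEGREES))  # 6000

# Crude share of the view covered by the ~2-degree sharp-focus region,
# treating the field of view as a flat square (a rough approximation).
fovea_fraction = (2.0 / FOV_DEGREES) ** 2
print(f"{fovea_fraction:.2%}")  # 0.04%
```

The last figure shows why concentrating pixels where the eye is looking is attractive: the sharp-focus region is a tiny fraction of the whole view.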

For Markus Mierse, senior director for graphics solutions at Socionext, nausea-free headset design poses two major challenges: the camera processing in the headset itself, and the transfer of the picture to the PC. “The rest of the processing is standard,” he told Electronics Weekly.

The Varjo XR and video-see-through (VST) headset uses two displays: a background display with standard high-definition (HD) resolution, and a centre display that follows the user’s gaze, like the human eye, and adjusts its focus to where the user is looking, providing a sharp image in the centre of the view. The headset display visualises images at 70Mpixels.

Conventional AR, in which a computer-generated image is superimposed on the user’s real-world view, offers only a very small display area with hard boundaries. This means, for example, that when looking at a large object, the headset wearer has to move their head to see all of the object’s boundaries. Conventional AR is also ‘transparent’: a superimposed object does not completely block the view, so the background shows dimly through it, like a phantom.

To counter this, Varjo uses cameras in AR mode to capture pictures of the real world. A graphics card reads these pictures and completes them by rendering augmented objects on to the view, then sends the complete images back to the headset.
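The phantom effect and Varjo’s camera-based fix can be contrasted with two textbook blending rules. This sketch assumes that conventional AR means an optical see-through display, which can only add light to the real scene, and models the video-see-through path with Porter-Duff ‘over’ compositing. The article does not say which compositing method Varjo’s software actually uses. Values are single-channel intensities normalised to the range 0 to 1.

```python
# Compare how a virtual object combines with the real background in
# optical see-through AR versus a video-see-through (camera) pipeline.
# Intensities are single-channel values normalised to [0.0, 1.0].

def optical_see_through(background: float, overlay: float) -> float:
    """Additive: the display can only add light on top of the real scene,
    so the background always shows through (the 'phantom' look)."""
    return min(1.0, background + overlay)

def video_see_through(background: float, overlay: float, alpha: float) -> float:
    """Porter-Duff 'over': the rendered pixel replaces the camera pixel in
    proportion to its opacity (alpha), so alpha = 1.0 fully hides the background."""
    return alpha * overlay + (1.0 - alpha) * background

# A dark virtual object (0.125) drawn over a bright wall (0.75):
print(optical_see_through(0.75, 0.125))           # 0.875: brighter than the wall, never dark
print(video_see_through(0.75, 0.125, alpha=1.0))  # 0.125: object appears solid
```

Because the additive result can never be darker than the real background, dark virtual objects cannot look solid on an optical display; compositing into camera images removes that limit.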

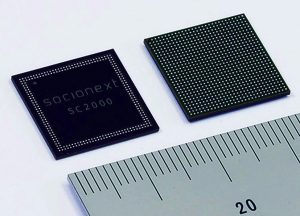

The headset uses an SoC from Socionext, the Milbeaut SC2000 image signal processor (ISP), to replace pixels, correct colour and white balance, perform noise reduction and correct defective pixels in the image pipeline before sending the image to the PC.
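A toy version of two of these pipeline stages is sketched below. The article does not describe the SC2000’s algorithms, so the sketch substitutes textbook techniques: a neighbour-median test for defective (hot or stuck) pixels and a 3 × 3 box filter for noise reduction, applied to a small greyscale frame stored as nested lists. The threshold of 64 and all function names are hypothetical.

```python
from statistics import median

Frame = list[list[int]]  # greyscale frame, one int per pixel

def neighbours(frame: Frame, y: int, x: int) -> list[int]:
    """Values of the up-to-8 pixels around (y, x), skipping out-of-bounds positions."""
    h, w = len(frame), len(frame[0])
    return [
        frame[ny][nx]
        for ny in range(y - 1, y + 2)
        for nx in range(x - 1, x + 2)
        if (ny, nx) != (y, x) and 0 <= ny < h and 0 <= nx < w
    ]

def correct_defective_pixels(frame: Frame, threshold: int = 64) -> Frame:
    """Replace any pixel that differs from the median of its neighbours by more
    than `threshold` (a likely hot or stuck pixel) with that median."""
    out = [row[:] for row in frame]  # copy so the input frame is not modified
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            med = median(neighbours(frame, y, x))
            if abs(value - med) > threshold:
                out[y][x] = round(med)
    return out

def box_denoise(frame: Frame) -> Frame:
    """Simple noise reduction: average each pixel with its in-bounds neighbours."""
    out = []
    for y, row in enumerate(frame):
        new_row = []
        for x, value in enumerate(row):
            window = neighbours(frame, y, x) + [value]
            new_row.append(round(sum(window) / len(window)))
        out.append(new_row)
    return out

def isp_pipeline(frame: Frame) -> Frame:
    """Correct defects first, so a hot pixel is not smeared into its neighbours."""
    return box_denoise(correct_defective_pixels(frame))

frame = [[100, 100, 100], [100, 255, 100], [100, 100, 100]]  # 255 = hot pixel
print(isp_pipeline(frame))  # [[100, 100, 100], [100, 100, 100], [100, 100, 100]]
```

The ordering matters: running the box filter first would spread the hot pixel’s value into the surrounding pixels before it could be detected.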

This has to happen in real time, because low latency is essential in VR/XR applications, which include training and design as well as gaming. The ISP is based on a quad-core Arm Cortex-A7 processor running at 650MHz.

Two 4K cameras feed a live picture of the outside world to the two displays in the headset via a powerful video pipeline. For each eye, very high-resolution images have to be processed and spread across the centre display and the background display. A tracking mechanism tells the ISP to send a section of the image to the PC. The ISPs process very large amounts of data in a relatively short time, says Mierse.
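The article does not say how the tracking mechanism chooses the section to send. One plausible reading, sketched here purely as an assumption, is a fixed-size window centred on the gaze point and shifted as needed to stay inside the camera frame. The gaze coordinates are normalised to 0 to 1, “4K” is taken as 3840 × 2160, and the 1,024 × 1,024 window size and the `focus_region` helper are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rect:
    x: int
    y: int
    width: int
    height: int

def focus_region(gaze_x: float, gaze_y: float,
                 frame_w: int, frame_h: int,
                 region_w: int, region_h: int) -> Rect:
    """Centre a region_w x region_h window on the gaze point (normalised 0..1),
    clamping it so the whole window stays inside the frame."""
    cx = round(gaze_x * frame_w)
    cy = round(gaze_y * frame_h)
    x = min(max(cx - region_w // 2, 0), frame_w - region_w)
    y = min(max(cy - region_h // 2, 0), frame_h - region_h)
    return Rect(x, y, region_w, region_h)

# Hypothetical 1024 x 1024 focus window in a 3840 x 2160 camera frame.
print(focus_region(0.5, 0.5, 3840, 2160, 1024, 1024))
# Rect(x=1408, y=568, width=1024, height=1024)
print(focus_region(0.98, 0.02, 3840, 2160, 1024, 1024))
# Rect(x=2816, y=0, width=1024, height=1024)
```

Clamping rather than shrinking the window keeps the section a constant size, which keeps the amount of data sent per frame predictable even when the user looks towards the edge of the view.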

Processor power

The Socionext Milbeaut SC2000 SoC combines a DSP for computer vision with image stabilisation for high-resolution images.

The ISP can process up to 1.2Gpixel/s, three times the throughput of the company’s previous processor. Power consumption is also lower in this iteration, at just 1.7W under typical operating conditions. For this project, latency is critical: every microsecond of delay between a head movement and the updated image could contribute to motion sickness.
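The quoted throughput translates into a per-frame time budget with some back-of-the-envelope arithmetic. This sketch assumes “4K” means 3840 × 2160 and that each camera’s full frame passes through one ISP running at its peak rate of 1.2Gpixel/s. The article gives no frame rate, so none is assumed; the result is an upper bound, not a measured figure.

```python
# Per-frame timing for one ISP at its quoted peak throughput.
# Assumptions: "4K" = 3840 x 2160, one ISP per camera, peak rate sustained.

ISP_PIXELS_PER_SECOND = 1.2e9
FRAME_PIXELS = 3840 * 2160  # pixels in one 4K frame

frame_time_ms = FRAME_PIXELS / ISP_PIXELS_PER_SECOND * 1e3
max_fps = ISP_PIXELS_PER_SECOND / FRAME_PIXELS

print(f"{FRAME_PIXELS:,} pixels per frame")                  # 8,294,400 pixels per frame
print(f"{frame_time_ms:.2f} ms per frame at peak throughput")  # 6.91 ms per frame at peak throughput
print(f"{max_fps:.1f} frames/s upper bound per ISP")           # 144.7 frames/s upper bound per ISP
```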

The ISPs work in sync, sending slices of the image to the PC rather than waiting for the whole image. According to Mierse, they take the background image and adjust the focus quickly to follow eye movements. Both images from each camera are sent to the PC.
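Sending slices does not raise total throughput, but it lets the PC start work on the top of a frame while the ISP is still processing the bottom. The sketch below estimates when data first becomes available with and without slicing. It assumes rows are processed at a steady rate, a 3840 × 2160 frame processed at the quoted 1.2Gpixel/s, and a hypothetical slice height of 64 rows; the article does not give the real slice size.

```python
# Hypothetical figures: a 2,160-row (4K) frame and 64-row slices.
TOTAL_ROWS = 2160
SLICE_ROWS = 64
# Time for one 3840 x 2160 frame at the quoted peak of 1.2Gpixel/s, in ms.
FRAME_TIME_MS = 3840 * TOTAL_ROWS / 1.2e9 * 1e3

def slices(total_rows: int, slice_rows: int):
    """Yield (start_row, end_row) pairs that cover the frame top to bottom."""
    for start in range(0, total_rows, slice_rows):
        yield start, min(start + slice_rows, total_rows)

def ready_after_ms(rows: int, total_rows: int, frame_time_ms: float) -> float:
    """Time until `rows` rows have been processed, assuming a steady row rate."""
    return frame_time_ms * rows / total_rows

whole_frame = ready_after_ms(TOTAL_ROWS, TOTAL_ROWS, FRAME_TIME_MS)
first_slice = ready_after_ms(SLICE_ROWS, TOTAL_ROWS, FRAME_TIME_MS)
print(f"{len(list(slices(TOTAL_ROWS, SLICE_ROWS)))} slices per frame")  # 34 slices per frame
print(f"whole frame ready after {whole_frame:.3f} ms")  # whole frame ready after 6.912 ms
print(f"first slice ready after {first_slice:.3f} ms")  # first slice ready after 0.205 ms
```

Under these assumptions the PC receives its first data roughly 30 times sooner, and its own processing can overlap with the ISP’s work on later slices.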

At the same time, the ISPs perform image correction in the pipeline, for example matching the white balance of both images in real time.
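One simple way to match white balance between the two cameras is to compute per-channel gains that make the right image’s average colour equal the left image’s. This is a minimal sketch, not the SC2000’s method, which the article does not describe. It assumes both cameras see roughly the same scene, works on already-demosaiced RGB values, and uses hypothetical helper names.

```python
RGB = tuple[float, float, float]

def channel_means(pixels: list[RGB]) -> RGB:
    """Average red, green and blue values over a list of pixels."""
    n = len(pixels)
    return (sum(p[0] for p in pixels) / n,
            sum(p[1] for p in pixels) / n,
            sum(p[2] for p in pixels) / n)

def matching_gains(reference: list[RGB], target: list[RGB]) -> RGB:
    """Per-channel gains that make target's average colour equal reference's."""
    ref, tgt = channel_means(reference), channel_means(target)
    return (ref[0] / tgt[0], ref[1] / tgt[1], ref[2] / tgt[2])

def apply_gains(pixels: list[RGB], gains: RGB) -> list[RGB]:
    """Scale each channel of every pixel by its gain."""
    return [(r * gains[0], g * gains[1], b * gains[2]) for r, g, b in pixels]

# Right camera reads red and green at half strength, giving a blue cast.
left = [(200, 180, 160), (100, 90, 80)]
right = [(100, 90, 160), (50, 45, 80)]
gains = matching_gains(left, right)
print(gains)                      # (2.0, 2.0, 1.0)
print(apply_gains(right, gains))  # [(200.0, 180.0, 160.0), (100.0, 90.0, 80.0)]
```

Matching one camera to the other, rather than correcting each independently, keeps the two eyes’ views consistent even if neither is perfectly neutral.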

The ISPs come in fine-pitch ball grid array (BGA) packages with stacked DRAM, giving a compact 15 × 15mm footprint that can be placed close to the image sensors in the headset.

Mierse predicts that the speed and resolution achieved in the headset with Socionext’s processors will change existing VR/XR applications and open up new ones.

“For example, in retail, objects can be properly placed in the customer’s environment, or architectural companies can use VR/XR to see how the inside as well as the outside of a building will look, and the impact of any changes. Today, with VR you can see a wall, but not in detail. This improved resolution will mean that every detail and light effect can be seen.”

“For training and simulation this is an interesting app,” he continued. “Pilots can see the make-up of the cockpit and have feedback and a realistic outside view in a training environment.”

Electronics Weekly Electronics Design & Components Tech News
