Figure 1: A TRNG generates a unique DAC private key for a Matter device

Thread and Wi-Fi protocols provide secure and encrypted wireless communication between devices. The security features of these protocols, however, protect only the transmission channels and the data flowing in them; they do not protect the end devices against bad actors. To address this challenge, silicon and smart home device manufacturers collaborated to develop Matter, an application-level protocol designed to be a unifying standard that provides interoperability across smart home ecosystems such as Amazon, Apple and Google.

Matter-enabled devices can communicate with each other regardless of ecosystem. An additional advantage is that Matter is implemented at the application layer, which can incorporate device-level security features.

Device identity

Matter defines a hierarchical certificate structure that must be followed to authenticate the device as legitimate, with each certificate being signed by a higher-level certificate in the hierarchy. The specification states: “All commissionable Matter nodes shall include a Device Attestation Certificate (DAC) and corresponding private key, unique to that device.” An effective way to create the DAC private key is by using a true random number generator (TRNG). The underlying silicon component in the Matter device (for example, a smart lightbulb) samples a physical entropy source (Figure 1). The raw entropy is provided as input to the TRNG function, which generates a random number that can, in turn, be used by the key generator function to derive a unique DAC private key for the Matter device. Using a high-entropy TRNG is critical because it provides the device with a unique identity and makes it difficult for bad actors to create counterfeit devices by reverse engineering the private key from its corresponding public key.
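The key generation step can be sketched in Python. This is a minimal illustration, assuming the DAC key is a NIST P-256 (secp256r1) key, the curve used for Matter certificates. The `read_entropy` hook is a hypothetical stand-in for a chip's TRNG driver and defaults here to the operating system's random source; the function name is likewise invented for the example.

```python
import secrets

# Order of the NIST P-256 (secp256r1) group; valid private keys lie in [1, N-1].
P256_ORDER = 0xFFFFFFFF00000000FFFFFFFFFFFFFFFFBCE6FAADA7179E84F3B9CAC2FC632551


def generate_private_scalar(read_entropy=secrets.token_bytes):
    """Draw a private key uniformly from [1, N-1] by rejection sampling.

    read_entropy is a hypothetical hook standing in for the chip's TRNG
    driver; it must return the requested number of random bytes.
    """
    while True:
        candidate = int.from_bytes(read_entropy(32), "big")
        # Reject out-of-range values instead of reducing them modulo N,
        # which would bias the result slightly towards small keys.
        if 1 <= candidate < P256_ORDER:
            return candidate


dac_private_key = generate_private_scalar()
```

Rejection sampling keeps every valid key equally likely, so the key is only as predictable as the entropy source feeding it.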

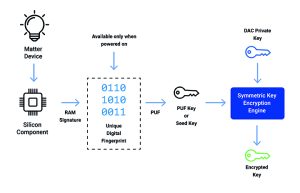

Protected key storage

The Matter specification states: “Devices should protect the confidentiality of attestation (DAC) private keys” and “Nodes should protect the confidentiality of node operational private keys.” There are several options for secure key storage. One is to create memory cells hidden in a security subsystem, but this requires using expensive silicon real estate. An alternative method is to use an integrated circuit-based physically unclonable function (PUF) to create a device-specific PUF key (Figure 2). The PUF key can then encrypt all key material (including the DAC private key), so that the encrypted keys can be kept in more cost-effective standard memory.

Figure 2: PUF can be used to store keys securely

A PUF is a physical structure embedded in an IC based on unique micro- or nano-scale device properties caused by variations in the manufacturing process, making it very difficult to clone. The SRAM PUF, which is based on standard components, is the most widely known. An SRAM cell contains six transistors – two cross-coupled inverters and two additional transistors for access. When a voltage is applied to a memory cell it initialises in either a 1 or 0 state, depending on the threshold voltages of the transistors in the two cross-coupled inverters. If an array of SRAM cells is large enough (between 1,000 and 2,000 cells), the random pattern of 1s and 0s that appears at power up can act as a device-unique ‘fingerprint’ for that integrated circuit. This can be used to create a symmetric root key called the PUF key, which in turn is used to encrypt keys that require higher levels of security storage (for example, the DAC private key). Each piece of silicon recreates its own PUF key each time it is powered up, making it almost impossible for an intruder to discover the PUF key.
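A toy simulation helps show why the power-up pattern can serve as a key. The sketch below is a simplified model, not a production design: manufacturing variation is imitated by a seeded random generator, the noise level and number of reads are assumed values, and majority voting stands in for the helper data and error correction that real SRAM PUF implementations typically rely on. All names are hypothetical.

```python
import hashlib
import random

CELLS = 1024   # array size, within the 1,000 to 2,000 range mentioned above
READS = 31     # power-up reads combined by majority vote (assumed value)
NOISE = 0.02   # assumed chance that a cell powers up in its non-preferred state


def manufacture(seed):
    """Model process variation: each cell gets a fixed preferred power-up bit."""
    rng = random.Random(seed)
    return [rng.getrandbits(1) for _ in range(CELLS)]


def power_up(preferred, rng):
    """One noisy power-up read of the whole array."""
    return [bit ^ (rng.random() < NOISE) for bit in preferred]


def reconstruct_puf_key(preferred, rng):
    """Majority-vote several reads, then hash the stable pattern into a 256-bit key."""
    reads = [power_up(preferred, rng) for _ in range(READS)]
    stable = [int(sum(column) * 2 > READS) for column in zip(*reads)]
    return hashlib.sha256(bytes(stable)).digest()


noise = random.Random()            # simulation-only noise source, not for real keys
chip_a = manufacture(seed=1)
chip_b = manufacture(seed=2)
key_a_first_boot = reconstruct_puf_key(chip_a, noise)
key_a_second_boot = reconstruct_puf_key(chip_a, noise)
key_b = reconstruct_puf_key(chip_b, noise)
```

In this model the same chip rebuilds the same key on every boot despite read noise, while a different chip yields an unrelated key, and no key is ever written to non-volatile storage.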

An essential feature of PUF is that the fingerprint is stored in memory only while power is applied to a device, eliminating the problem of how to store the encryption key. This protects devices from all logical and physical attacks attempting to read keys. An additional advantage is that it provides almost unlimited secure key storage, as the encrypted key can be stored on external storage devices without the danger of compromising the private key.

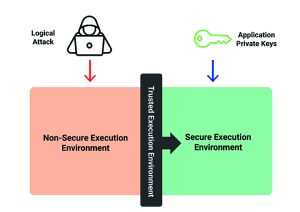

Figure 3: A TEE can store application private keys

A trusted execution environment (TEE) security toolset is an alternative way to protect private keys on a Matter device. A TEE can be used to partition the hardware and software elements of an SoC into secure and non-secure execution environments. Hardware barriers prevent the non-secure environment from accessing the secure one. The barrier prevents physical access to the memory and system controls and also limits the amount of state switching. The non-secure execution environment typically runs processes and applications with larger attack surfaces and is more prone to remote attacks. A properly executed TEE offers robust protection against logical attacks for software-stored private keys in the secure area, but does not guard against physical attacks.

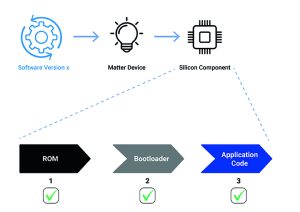

Image encryption and updates

Image encryption and over-the-air updates are critical requirements in the Matter specification. They ensure that end devices execute only authentic code and that software can be uploaded to them remotely and securely. Matter device manufacturers face two challenges. First, they must be able to guarantee that the software running on a device is authentic. Second, they must ensure that all software updates come from a legitimate source, which requires verifying the authenticity and integrity of the received code. An efficient approach to the first problem is to validate the signature on each piece of code before executing it on the device (Figure 4).

Figure 4: Authenticating firmware using a root of trust

For example, consider the memory of a device to be divided into three pieces: the ROM code, the bootloader and the application code. In this example, the ROM code could be used to verify the signature of the bootloader code before executing it, and the bootloader code, in turn, could verify the signature of the application code. The question arises about how the ROM code can be authenticated. This is done by using a root of trust, an immutable memory running a small piece of fuzz-tested software that should never be rewritten. This acts as a ‘single source of truth’ at the top of the authentication hierarchy. The second problem can be solved by using an over-the-air encryption key to encrypt the software update before it is sent to the device; the key is then used to decrypt the update on the device before it is allowed to install. This helps secure the communication channel, thereby ensuring that the updates are coming from a legitimate source.
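The chain of verification described above can be illustrated with a short Python sketch. One substitution is made for the sake of a self-contained example: each stage pins a SHA-256 digest of the next stage instead of checking a public-key signature, as a real secure boot implementation would. The function names and image contents are hypothetical.

```python
import hashlib
import hmac


def measure(code: bytes, next_digest: bytes) -> bytes:
    """Digest covering a stage's code and the digest it pins for the next stage."""
    return hashlib.sha256(code + next_digest).digest()


def build_chain(codes):
    """Return (code, next_digest) stages plus the digest to fix in the root of trust."""
    stages, next_digest = [], b""
    for code in reversed(codes):
        stages.append((code, next_digest))
        next_digest = measure(code, next_digest)
    return list(reversed(stages)), next_digest


def boot(stages, trusted_digest):
    """Verify each stage before 'executing' it; stop at the first mismatch."""
    expected = trusted_digest
    for index, (code, next_digest) in enumerate(stages):
        if not hmac.compare_digest(measure(code, next_digest), expected):
            return f"halt at stage {index}"
        expected = next_digest   # only trusted once the stage itself verified
    return "boot ok"


# Stage 0 is the ROM code, stage 1 the bootloader, stage 2 the application.
stages, root_digest = build_chain([b"rom code", b"bootloader", b"application"])
clean_result = boot(stages, root_digest)

tampered = list(stages)
tampered[2] = (b"malicious application", b"")
tampered_result = boot(tampered, root_digest)
```

Only `root_digest` needs to live in immutable memory: every later stage is trusted solely because the stage before it vouched for it.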

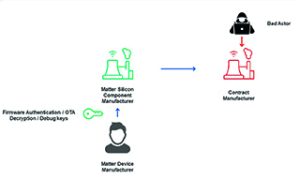

Securing devices during manufacturing

The Matter specification recommends fusing devices during production to prevent bad actors from extracting device information that they could use to create counterfeits. It also suggests device makers lock the debug port or implement trusted anchors for secure boot. However, locking the port outright is not always desirable because it restricts debugging. An alternative approach is for device makers to lock a debug port at the manufacturing facility and offer only limited access to a selected group of people.

The first step in restricting debug ports is to create a private-public key pair and store the public key in the device. When a developer requires access to the debug port for debugging, they can sign the debugging command using the corresponding private key before sending it to the device (Figure 5). The signature on the command is verified using the public key before the debugging is allowed to proceed. However, while this approach restricts access to the hardware device during manufacture, it does not protect against scenarios in which a device was compromised at an earlier stage of the manufacturing process.
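The signed-command check can be sketched in Python. To stay within the standard library, the example uses Lamport one-time signatures built from SHA-256; this is a stand-in chosen for illustration, and a production device would more likely use a scheme such as ECDSA. A Lamport key may sign only a single message, and the command string and function names are hypothetical.

```python
import hashlib
import secrets


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    """Lamport key pair: 256 pairs of secrets, with their hashes as the public key."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(sha256(zero), sha256(one)) for zero, one in private]
    return private, public


def message_bits(message: bytes):
    """The 256 bits of the message digest, one per key pair."""
    value = int.from_bytes(sha256(message), "big")
    return [(value >> i) & 1 for i in range(256)]


def sign(private, message: bytes):
    """Reveal one secret per digest bit (one-time use only)."""
    return [private[i][bit] for i, bit in enumerate(message_bits(message))]


def verify(public, message: bytes, signature) -> bool:
    """Device-side check: each revealed secret must hash to the stored public value."""
    bits = message_bits(message)
    return len(signature) == 256 and all(
        sha256(part) == public[i][bit]
        for i, (part, bit) in enumerate(zip(signature, bits))
    )


developer_private, device_public = keygen()   # the public half is stored on the device
command = b"unlock-debug-port"
signature = sign(developer_private, command)
port_unlocked = verify(device_public, command, signature)
```

The device holds only public values, so extracting them from a deployed product does not let an attacker forge an unlock command.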

Figure 5: Programming at source increases security for Matter-compliant devices

To create secure keys, Matter device manufacturers that want to reduce threats in the outsourced production phase should programme the device and provision device certificates and security keys at a trusted manufacturing source. One recommendation is to have the IC manufacturer perform this so that the manufacturing assets are never exposed (even at the silicon manufacturing site). This approach minimises the possibility of bad actors counterfeiting or cloning devices.

Smart home devices must be as secure as possible to protect end consumers against privacy breaches. Securing connected mass-market products is complicated as they are used in many different ecosystems. As the Matter protocol gains popularity in the smart home industry, manufacturers can use its additional security features to protect their smart devices and the data flowing between them, easing customers’ security concerns and safeguarding their brand’s reputation.

Electronics Weekly Electronics Design & Components Tech News
